Snapshot isolation in PostgreSQL

Intro

No matter whether your application operates on a common RDBMS or a bleeding-edge NoSQL database you still need to cater for proper isolation of operations done by your end users.

Although the trade-offs made by the creators of each database can be slightly different the mechanics and limitations stay the same. If you decide to spend time to better understand the basic concepts and possible strategies for implementing isolation then later it can make your life much easier when you need to quickly get up to speed with a new database.

In this article we will take a look at how PostgreSQL solves the isolation problem by leveraging optimistic approach to locking.

The problem

Even the most trivial-sounding problems require putting some thought into how different isolation levels are implemented in a particular database. Take for example the classic problem of debit and credit in an account.

Let’s start with something simple. Suppose we just want to add 100$ to Bob’s account. We could write SQL code so that we first fetch the current value:

SELECT balance FROM accounts WHERE owner = 'Bob'; -- balance: 500$…and then increment it by 100. Assuming the returned balance was 500 we just need to add 100:

UPDATE accounts

SET balance = 600 -- 500$ + 100$

WHERE owner = 'Bob';Lost updates

There is, however, a potential problem that other transaction could jump in-between and some data would get lost. It means that an overlapping transaction aiming to increase Bob’s balance by 300$ could finish successfully, only that Bob would not receive his money.

-- TRANSACTION #1

SELECT balance

FROM accounts

WHERE owner = 'Bob';

-- balance: 500$

UPDATE accounts

SET balance = 600

WHERE owner = 'Bob';

COMMIT;

-- balance: 600$

-- (300$ was lost)-- TRANSACTION #2

SELECT balance

FROM accounts

WHERE owner = 'Bob';

-- balance: 500$

UPDATE accounts

SET balance = 800

WHERE owner = 'Bob';

COMMIT;

-- balance: 800$

-- balance: should be 900$

-- (500$ + 300$ + 100$)Is this an isolation level problem?

According to SQL standard there are 4 isolation levels to pick from and it is quite common in relational databases to have READ COMMITTED as the default isolation mode.

And if you stick to the default isolation level in PostgreSQL you might run into the exact problem we have just experienced because it happens only in READ UNCOMMITTED and READ COMMITTED.

One way to overcome this is to just raise the isolation level to REPEATABLE READS to prevent losing updates.

When you run the following code in PostgreSQL one of interleaving transactions will crash and it will need to be manually retried from your application:

BEGIN ISOLATION LEVEL REPEATABLE READ;

SELECT balance

FROM accounts

WHERE owner = 'Bob';

UPDATE accounts

SET balance = ...

WHERE owner = 'Bob';

-- it will crash here if Bob's account has been modified

-- since the beginning of this transaction

COMMIT;If you are curious this is the error that will be thrown:

ERROR: could not serialize access due to concurrent update

SQL state: 40001But how was this implemented in PostgreSQL? How did PostgreSQL realise that a record was modified from another transaction?

Snapshot isolation

A popular solution to this problem is called snapshot isolation. When working in REPEATABLE READ mode, at the beginning of each transaction PostgreSQL takes a consistent snapshot of the database. When you then query the data you only get records committed up to this instant in time. Later changes are not visible and conflicts result in aborting the transaction (as we have just observed).

Taking a snapshot sounds like a really expensive operation that is going to absolutely kill the performance of PostgreSQL…

MultiVersion Concurrency Control (MVCC)

But actually it is not that terrible thanks to a technique called MVCC, standing for MultiVersion Concurrency Control, which does the job of providing consistent views of the data while keeping the performance in check.

MVCC, as the name suggests, maintains several versions of the same record, meaning instead of overwriting the changed data a new version of the record is created.

Before we get to how PostgreSQL knew it needed to abort the conflicting transaction we need to go through some concepts so please bear with me.

xid

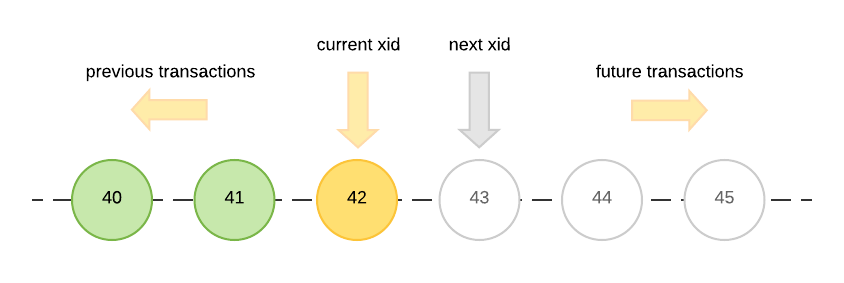

First of all, in order to distinguish transactions, each one of them receives a special identifier, called xid, which is a sequential number uniquely identifying a transaction.

xmin and xmax

Then each version of a record gets additional metadata:

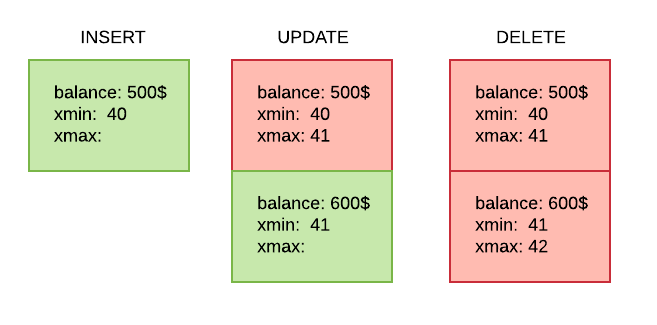

- xmin - xid of the transaction that created the record

- xmax - xid of the transaction that deleted the record

When a new account is INSERTed PostgreSQL creates a record with xmin set to the current transaction id (xid) and leaves xmax empty. If you later decide to UPDATE the account then instead of overwriting the existing record a new one is created. The old record becomes invisible by setting xmax to the transaction that updated it. Finally, if the account was meant to be DELETED then all there is to do is to properly set xmax of the last existing record.

Other in-progress transactions

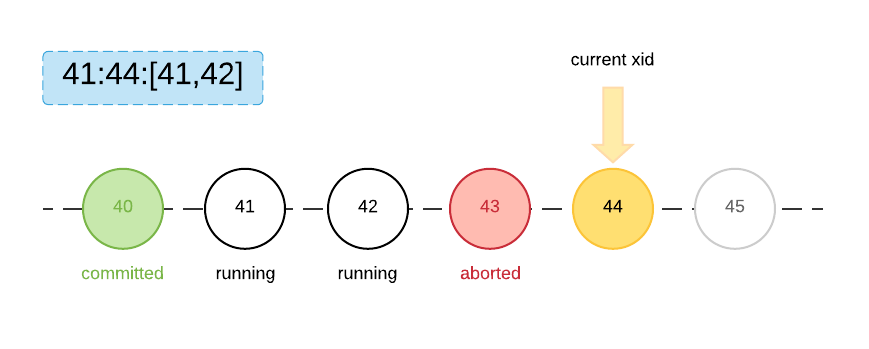

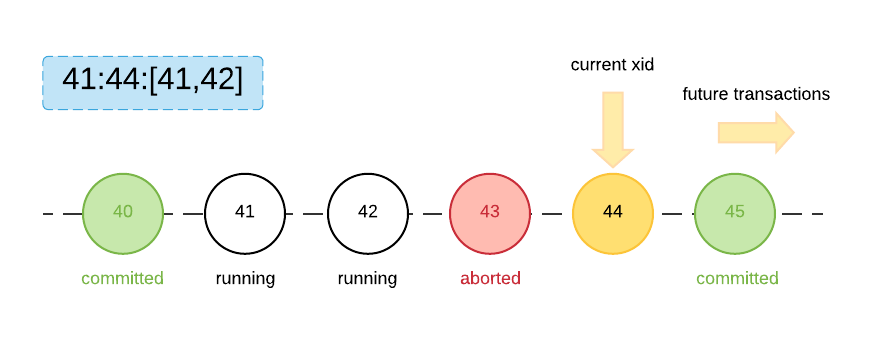

The last piece of the puzzle is information about other active transactions. Whenever PostgreSQL takes a snapshot it collects information about the currently open transactions that have not yet finished. With such information it can later efficiently resolve conflicts because then it can discover that another transaction has just modified the record that the current transaction was about to update. Active transactions are described in PostgreSQL in the following format:

| xmin | : | xmax | : | xip_list |

|---|---|---|---|---|

| earliest active transaction | first as-yet-unassigned transaction | list of in-progress transactions |

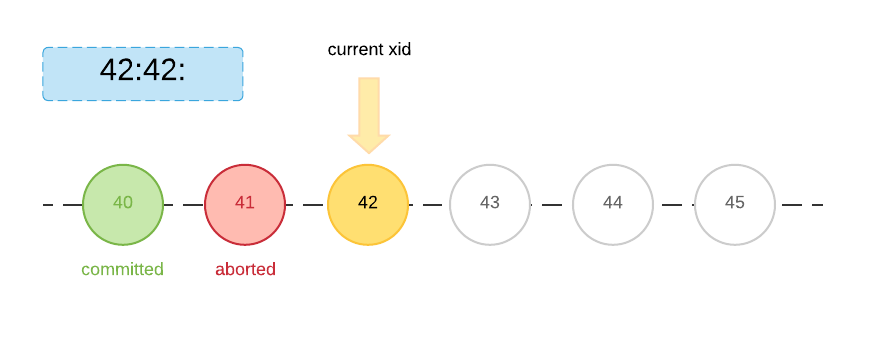

In the simplest case all transactions have already finished (some finished successfully and some were aborted):

But most likely there will be some other transaction(s) currently running in the system:

While the current transaction is still open another transaction could start and then complete before the current one finishes (here xid=45 started after snapshot for xid=44 was taken):

How does it all play together?

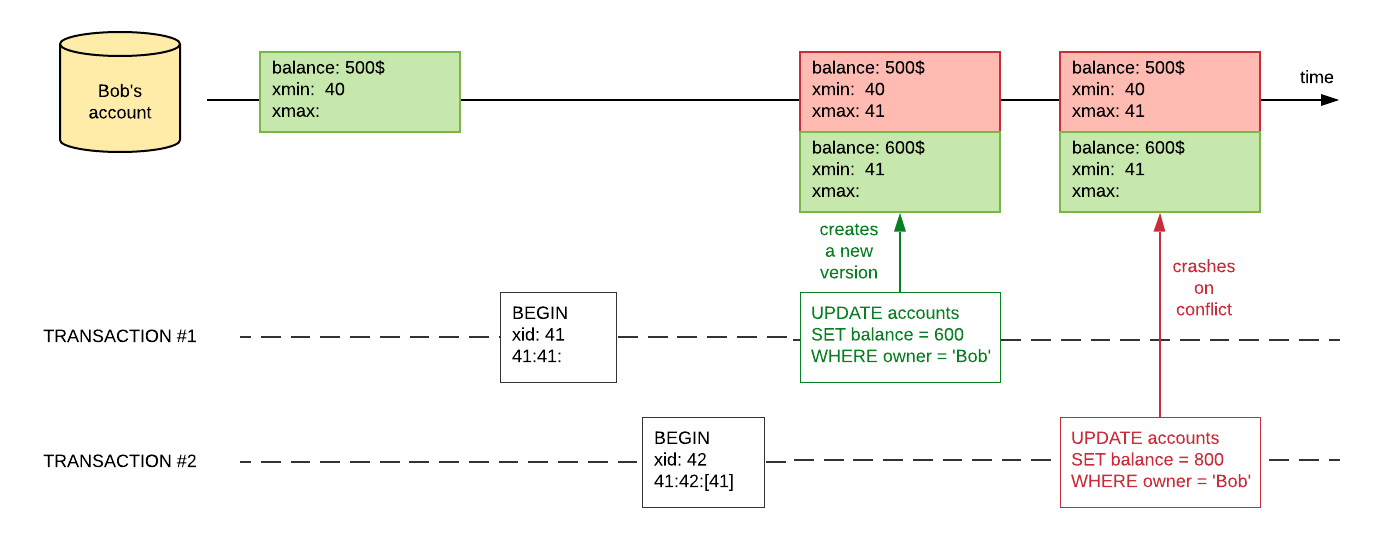

Returning to our example, below you can find a diagram showing how PostgreSQL came to the conclusion that a conflict has occurred and that transaction #2 needs to be aborted:

The first transaction (#1) succeeds which results in a new version of Bob’s account to be created.

But when transaction #2 tries to update Bob’s account PostgreSQL discovers that a new version of the record was created since transaction #2 has began and it can only be resolved by retrying from the application code (and so it crashes).

This was possible to deduce because xid of transaction #1 (xid=41) was on the list of active transactions (41:42:[41]) when transaction #2 started (it means that transaction #1 had to change the record in the meantime).

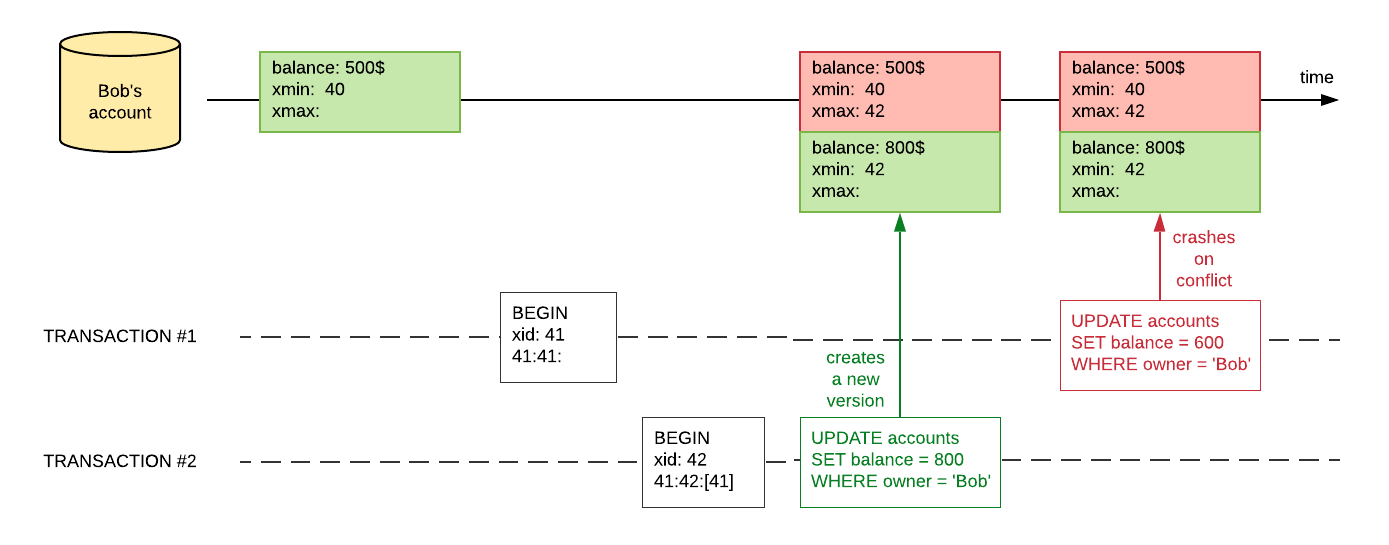

It could also happen that transaction #2 updated sooner than #1:

Transaction #1 figures out there is a conflict because xid=42 is greater than xmax in the snapshot (41:41:) so it had to be updated by a transaction that started later (a future transaction from the standpoint of transaction #1).

This is only a part of the story. PostgreSQL could also realise during UPDATE that another transaction is updating the same record. In that scenario it will wait for this transaction to finish. If the other transaction aborts then it can proceed with its update. Otherwise it needs to fail. First updater wins.

How all possible scenarios are handled is explained here.

Drawbacks of PostgreSQL’s MVCC implementation

As you can see, MVCC is quite a clever technique. On the downside, it obviously takes more disk space because all those additional versions need to be stored somewhere.

The challenge here is to protect the database from bloating caused by the old versions of records that are not used anymore by any transaction but are still taking up the space. There needs to be a process sweeping the database and removing such unnecessary data (think garbage collector for databases). In PostgreSQL such process is called autovacuum (“auto” because you can also run vacuum manually whenever you think is the right moment).

Hypothetically, this could be turned into an advantage if your use case was to store historical data. You could then go back in time and compare values from different points in the past.

Another potential issue is the wraparound of the counter generating sequential transaction identifiers. Counters have limits and eventually they reach their max values. At some point xid will need to roll over and start from zero. If incorrectly set up, or even worse: when disabled, it can hit back in the least expected moment (which can have unpleasant consequences for you and your users).

Displaying balances of two accounts

Going back to the accounts problem, what if now Bob wanted to transfer money to Alice? It would be convenient for him if he could monitor the progress of this action.

While the transfer takes place he would like to see both his and Alice’s balance. Let’s assume for the sake of this example that it is perfectly legal for Bob to see how much money Alice has in her account. How does MVCC comes into play?

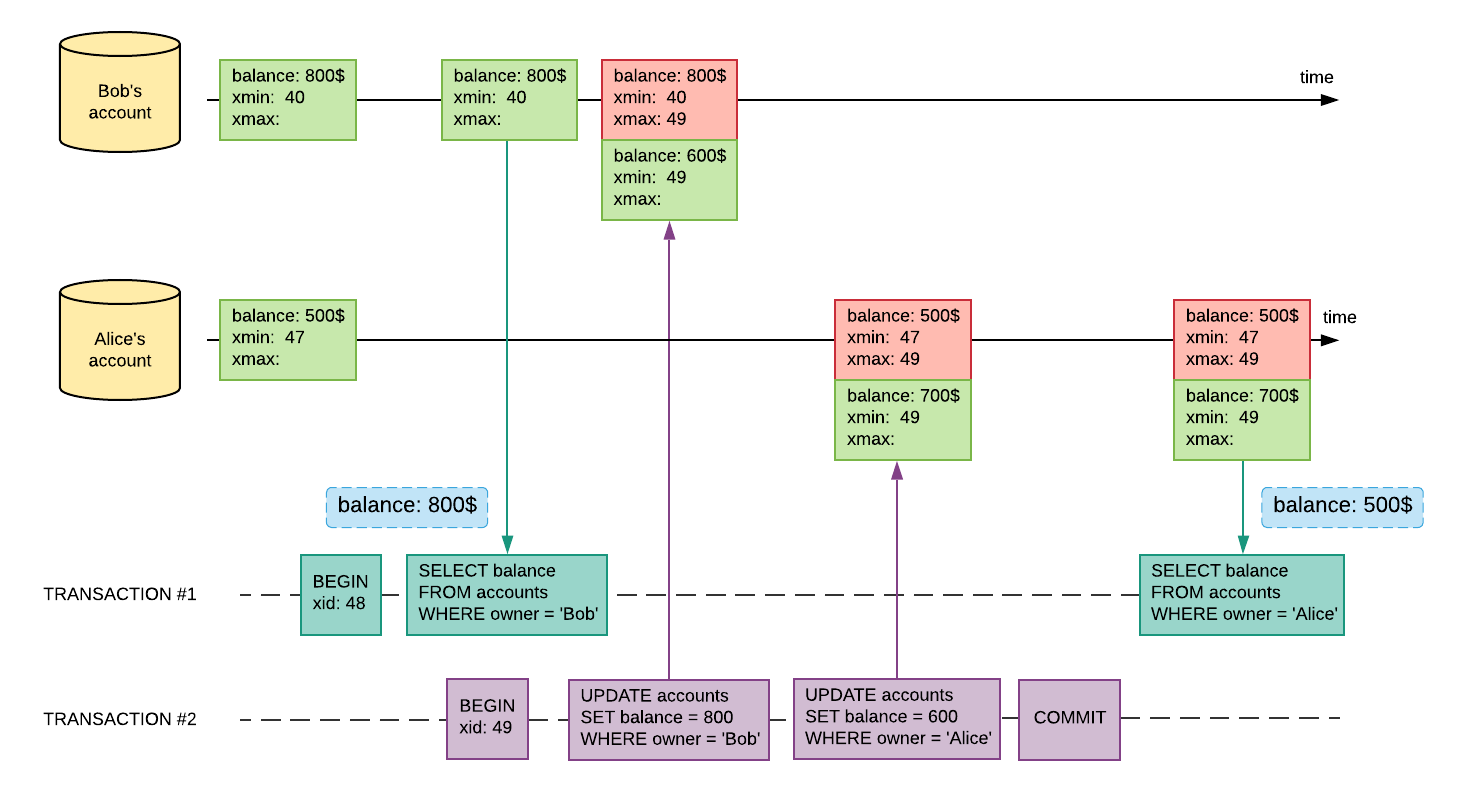

Here is what would happen if a transaction reading balances interleaved with a transaction that updated both accounts:

Transaction #1 (xid=48) starts first and manages to fetch balance of Bob’s account before the second transaction (xid=49) updates this account.

But then transaction #2 updates Alice’s account before transaction #1 gets a chance to check its balance.

Now, depending on the isolation level, transaction #1 either:

- returns the new value (700$) in READ COMMITTED mode, since this is what was literally just “committed” (but then Bob would see inconsistent view of the data)

- …or returns 500$ if in REPEATABLE READ mode (the value is older but it coherently shows a state of the database at some point of time)

In this case, the second option sounds like a more reasonable thing to do. At least it should behave less surprising for Bob.

PostgreSQL can make the distinction which value should be returned because it sees 2 versions of the record:

- balance=500$, xmin=47, xmax=49

- balance=700$, xmin=49

Because PostgreSQL knows the xid of transaction #1 (xid=48) it can make an informed decision whether it should return the most recently committed record updated while it was still running (700$: xmin=49 > xid=48) or return an older version but consistent with the first select (500$: xmin=47 < xid=48 < xmax=49).

Read more

If you have stayed till the end and it made you eager to learn more then Martin Kleppmann’s book, “Designing Data-Intensive Applications”, should be able to satisfy your curiosity. It is a tough and long read but it is definitely worth the time and effort.

Another good source is PostgreSQL documentation. There is a whole chapter that contains a succinct explanation of concurrency problems that could happen and how they were solved in PostgreSQL.

However, you may find those docs a little bit shallow because they are lacking a detailed explanation how each concurrency mechanism was actually implemented. Fortunately, there exists an extensive documentation that will take you on a deep dive on all the intricacies of PostgreSQL internals.