Scaling Kafka

Foreword

In this post we will explore the basic ways in which a Kafka cluster can grow to handle more load. We will go ‘from zero to hero’, so even if you have never worked with Kafka you should find something useful.

Basics

Let’s start with basic concepts and build from there.

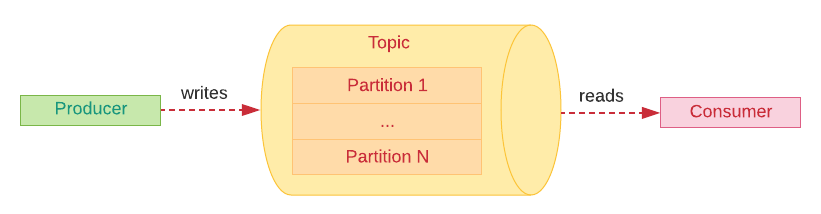

In Kafka producers publish messages to topics from which these messages are read by consumers:

Partitions

If we zoom in, we see that topics consist of partitions.

Segments

Partitions, in turn, are split into segments.

You can think of segments as log files on persistent storage, where each segment is a separate file. If we take a closer look, we finally see that segments contain the actual messages sent by producers.

New messages are appended at the end of the last segment, which is called the active segment.

Each message has an offset, which uniquely identifies the message within its partition.
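To make the relationship between offsets and segments a bit more tangible, here is a minimal sketch in plain Java. It assumes that each segment is identified by the offset of its first message (its base offset) and that the file name is that offset zero-padded to 20 digits. The class `SegmentIndex` and its methods are made up for illustration and are not part of any Kafka API.

```java
import java.util.Map;
import java.util.TreeMap;

/** Toy model of one partition: segments keyed by the offset of their first message. */
final class SegmentIndex {
    // base offset -> segment file name (illustrative naming)
    private final TreeMap<Long, String> segments = new TreeMap<>();

    /** Registers a segment that starts at the given base offset. */
    void addSegment(long baseOffset) {
        segments.put(baseOffset, String.format("%020d.log", baseOffset));
    }

    /** Returns the segment holding the offset: the one with the greatest base offset <= offset. */
    String segmentFor(long offset) {
        Map.Entry<Long, String> entry = segments.floorEntry(offset);
        if (entry == null) {
            throw new IllegalArgumentException("offset " + offset + " precedes the first segment");
        }
        return entry.getValue();
    }

    /** The active segment is the last one; new messages are appended there. */
    String activeSegment() {
        if (segments.isEmpty()) {
            throw new IllegalStateException("partition has no segments yet");
        }
        return segments.lastEntry().getValue();
    }
}
```

With segments starting at offsets 0, 1000 and 2000, a lookup for offset 1500 lands in the segment that starts at 1000, while the segment that starts at 2000 is the active one.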

Brokers

Going back to the overview, some entity has to handle the requests of producers and consumers. This component is called a broker.

A Kafka broker is basically a server handling incoming TCP traffic: it either stores messages sent by producers or returns messages requested by consumers.

Multiple partitions

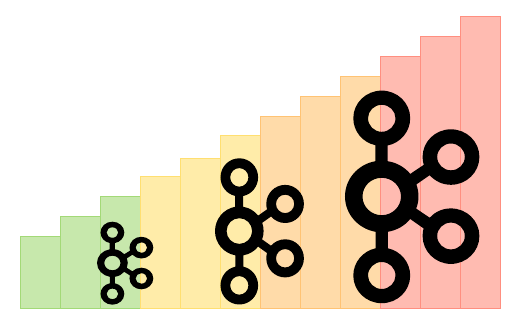

The simplest way your Kafka installation can grow to handle more requests is by increasing the number of partitions:

From the producer’s perspective, this means that it can now publish new messages to different partitions simultaneously.

Messages could potentially be sent from producers running in different threads or processes, or even on separate machines.

However, in such a case you could consider splitting this big topic into specific sub-topics, because a single consumer can easily read from a list of topics.

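Producer implementations try to spread messages evenly across all partitions. With the default partitioner of the Java client, a message that carries a key is routed by a hash of that key, so messages with the same key land on the same partition as long as the number of partitions does not change. It is also possible to specify programmatically the partition to which a message should be appended: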

    var producer = new KafkaProducer(...);
    var topic = ...;
    var key = ...;
    var value = ...;

    // partition can be handpicked
    int partition = 42;
    var message = new ProducerRecord<>(topic, partition, key, value);
    producer.send(message);

More on that can be found in the ProducerRecord JavaDocs.

In short, you can pick the partition yourself or rely on the producer to do it for you. The producer will do its best to distribute messages evenly.
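If you leave the choice to the producer and your messages carry a key, the partition is typically derived from a hash of the key. The sketch below shows the idea in plain Java. Note the assumption: it uses `Arrays.hashCode` as a stand-in hash, whereas the real Java client hashes the serialized key with murmur2, so the partition numbers it produces will not match what Kafka would pick. `KeyPartitionerSketch` is a hypothetical name used only here.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class KeyPartitionerSketch {
    /** Maps a key to a partition in the range [0, numPartitions). */
    static int partitionFor(String key, int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        // Stand-in hash (assumption); the real client uses murmur2 over the serialized key.
        int hash = Arrays.hashCode(keyBytes);
        // floorMod keeps the result non-negative even when the hash is negative.
        return Math.floorMod(hash, numPartitions);
    }
}
```

The useful property is that the same key always maps to the same partition for a given partition count, which keeps messages for one key in order. It also shows why increasing the number of partitions changes where existing keys land.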

Consumers

On the other side we have Kafka consumers. Consumers are services that poll for new messages. They could run as separate threads, but could just as well be entirely different processes running on different machines. Physically separating your consumers can actually be a good thing, especially from a high-availability standpoint: if one machine crashes, there is still a consumer to take over the load.

In the case of consumers you have to make a similar choice as with producers: you can take control and assign partitions to consumers manually, or you can leave the partition assignment to Kafka. The latter means that consumers can subscribe to a topic at any time and Kafka will distribute the partitions between the consumers that joined.

Consumer groups

In this subscription model, messages are consumed within consumer groups.

Consumers join consumer groups and Kafka takes care of distributing partitions evenly among them.

Whenever a new consumer joins a consumer group, Kafka rebalances the partitions across the group’s members.

Within a group, each partition is assigned to only one consumer. So if there are more consumers than partitions, some consumers will stay idle (i.e. will not be assigned any partition).

It could be beneficial to keep such idle consumers, so that when a consumer dies an idle one jumps in and takes over.
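The behaviour described above can be sketched with a few lines of plain Java. This is a deliberately simplified round-robin assignment, not one of Kafka’s actual assignors, and `GroupAssignmentSketch` with its `assign` method is a hypothetical helper. Calling it again with a different list of consumers plays the role of a rebalance.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class GroupAssignmentSketch {
    /** Deals partitions 0..numPartitions-1 to the consumers in turn. */
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            // Every group member gets an entry, even if it ends up with no partitions.
            assignment.put(consumer, new ArrayList<>());
        }
        if (consumers.isEmpty()) {
            return assignment;
        }
        for (int partition = 0; partition < numPartitions; partition++) {
            // Each partition goes to exactly one consumer in the group.
            String owner = consumers.get(partition % consumers.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }
}
```

With four partitions, two consumers `c1` and `c2` get `[0, 2]` and `[1, 3]`. When `c3` joins, the result becomes `[0, 3]`, `[1]` and `[2]`. With five consumers, the fifth one gets an empty list and stays idle.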

Multiple brokers in a cluster

Eventually, you will no longer be able to handle the load with just one broker.

You could then add more partitions, but this time these partitions will be handled by a new broker. You can scale horizontally in this way as often as you need.
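As a rough illustration of this idea, the toy model below places each new partition on the broker that currently hosts the fewest partitions. This placement policy is an assumption made for the sketch and is not how Kafka itself decides where partitions go. `BrokerPlacementSketch` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

final class BrokerPlacementSketch {
    // partitionsPerBroker.get(i) holds the ids of the partitions hosted by broker i
    private final List<List<Integer>> partitionsPerBroker = new ArrayList<>();
    private int nextPartitionId = 0;

    /** Adds an empty broker and returns its index. */
    int addBroker() {
        partitionsPerBroker.add(new ArrayList<>());
        return partitionsPerBroker.size() - 1;
    }

    /** Creates one partition on the least-loaded broker and returns that broker's index. */
    int addPartition() {
        if (partitionsPerBroker.isEmpty()) {
            throw new IllegalStateException("no brokers in the cluster");
        }
        int target = 0;
        for (int i = 1; i < partitionsPerBroker.size(); i++) {
            if (partitionsPerBroker.get(i).size() < partitionsPerBroker.get(target).size()) {
                target = i;
            }
        }
        partitionsPerBroker.get(target).add(nextPartitionId++);
        return target;
    }

    /** Returns a read-only copy of the partition ids hosted by the given broker. */
    List<Integer> partitionsOn(int broker) {
        return List.copyOf(partitionsPerBroker.get(broker));
    }
}
```

Starting with one broker and three partitions, then adding a second broker and three more partitions, the new partitions all end up on the new broker. The existing partitions stay where they were; in this sketch, as in a real cluster, moving them is a separate step.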

Dedicated clusters or multi-tenancy?

Now that you know the basics of scaling a Kafka cluster, there is one important thing to take into consideration: how are you going to run Kafka within your organisation?

A cost-effective approach might be to run a single multi-tenant cluster. The idea here is that all of your company’s applications connect to this one cluster. Having just one service to maintain is more manageable and also cheaper from an operational perspective.

But then you need to deal with the ‘noisy neighbour’ problem, where one application hammers your Kafka cluster and disrupts the work of other applications. Avoiding it requires some more thought in the form of capacity planning and setting up quotas (i.e. limits) on how much of a resource (e.g. network bandwidth) a particular client can use.
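To get a feeling for what a bandwidth quota means in practice, here is a small back-of-the-envelope calculation in Java. It is only one simple way to reason about throttling, under the assumption that a client which exceeded its quota is paused just long enough for its average rate to fall back to the limit. It is not a copy of what the broker does internally, and `QuotaThrottleSketch` is a hypothetical name.

```java
final class QuotaThrottleSketch {
    /**
     * Returns how long (in ms) a client should be paused so that its average
     * rate over the window plus the pause drops back to the quota.
     */
    static long throttleMillis(long bytesInWindow, long windowMillis, long quotaBytesPerSecond) {
        if (windowMillis <= 0 || quotaBytesPerSecond <= 0) {
            throw new IllegalArgumentException("window and quota must be positive");
        }
        // Time the observed bytes would have taken at exactly the quota rate.
        double allowedMillis = bytesInWindow * 1000.0 / quotaBytesPerSecond;
        // No pause is needed when the client stayed within its quota.
        return Math.max(0L, Math.round(allowedMillis - windowMillis));
    }
}
```

For example, a client limited to 1,000,000 bytes per second that sent 2,000,000 bytes within a one-second window would be paused for about 1000 ms, while a client that sent 500,000 bytes would not be paused at all.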

Another option is to spawn a dedicated Kafka cluster handling only requests from a particular application (or a subset of your company’s applications). You may actually be forced into this solution if you have a critical application that requires better guarantees and more predictability.

Summary

As you can see, scaling Kafka is not that complicated. In a nutshell, you just add more partitions or increase the number of servers in your cluster.